有關權重如何初始化也是個各派不同的訓練方法,從 tf.keras 官方文檔就看到一大堆的初始化方式,對於開始接觸的人來說,還真是頭痛。

我們知道如果權重分佈變異數大,會容易導致梯度爆炸,相反地,如果權重分佈的變異數小,容易導致梯度消失,模型很難更新,理想上的權重分佈是平均為0,各層的標準差數值差不多,對模型的學習來說是最健康的。

而今天我挑了幾個初始化方式來跟大家介紹,並且會個別訓練模型看看會發生什麼事。

模型用昨天自己打造的 mobilenetV2,並設計了一個 func 方便將初始化方式導入。

def bottleneck(net, filters, out_ch, strides, weight_init, shortcut=True, zero_pad=False):

padding = 'valid' if zero_pad else 'same'

shortcut_net = net

net = tf.keras.layers.Conv2D(filters * 6, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.layers.ReLU(max_value=6)(net)

if zero_pad:

net = tf.keras.layers.ZeroPadding2D(padding=((0, 1), (0, 1)))(net)

net = tf.keras.layers.DepthwiseConv2D(3, strides=strides, use_bias=False, padding=padding, depthwise_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.layers.ReLU(max_value=6)(net)

net = tf.keras.layers.Conv2D(out_ch, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

if shortcut:

net = tf.keras.layers.Add()([net, shortcut_net])

return net

def get_mobilenetV2_relu(shape, weight_init):

input_node = tf.keras.layers.Input(shape=shape)

net = tf.keras.layers.Conv2D(32, 3, (2, 2), use_bias=False, padding='same', kernel_initializer=weight_init)(input_node)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.layers.ReLU(max_value=6)(net)

net = tf.keras.layers.DepthwiseConv2D(3, use_bias=False, padding='same', depthwise_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.layers.ReLU(max_value=6)(net)

net = tf.keras.layers.Conv2D(16, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = bottleneck(net, 16, 24, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_1

net = bottleneck(net, 24, 24, (1, 1), weight_init, shortcut=True) # block_2

net = bottleneck(net, 24, 32, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_3

net = bottleneck(net, 32, 32, (1, 1), weight_init, shortcut=True) # block_4

net = bottleneck(net, 32, 32, (1, 1), weight_init, shortcut=True) # block_5

net = bottleneck(net, 32, 64, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_6

net = bottleneck(net, 64, 64, (1, 1), weight_init, shortcut=True) # block_7

net = bottleneck(net, 64, 64, (1, 1), weight_init, shortcut=True) # block_8

net = bottleneck(net, 64, 64, (1, 1), weight_init, shortcut=True) # block_9

net = bottleneck(net, 64, 96, (1, 1), weight_init, shortcut=False) # block_10

net = bottleneck(net, 96, 96, (1, 1), weight_init, shortcut=True) # block_11

net = bottleneck(net, 96, 96, (1, 1), weight_init, shortcut=True) # block_12

net = bottleneck(net, 96, 160, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_13

net = bottleneck(net, 160, 160, (1, 1), weight_init, shortcut=True) # block_14

net = bottleneck(net, 160, 160, (1, 1), weight_init, shortcut=True) # block_15

net = bottleneck(net, 160, 320, (1, 1), weight_init, shortcut=False) # block_16

net = tf.keras.layers.Conv2D(1280, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.layers.ReLU(max_value=6)(net)

return input_node, net

由於模型是從頭開始訓練的,資料集使用 cifar10,共有10種分類,但每個分類的張數很多,訓練10個 epochs 就將盡需要花費一個小時。

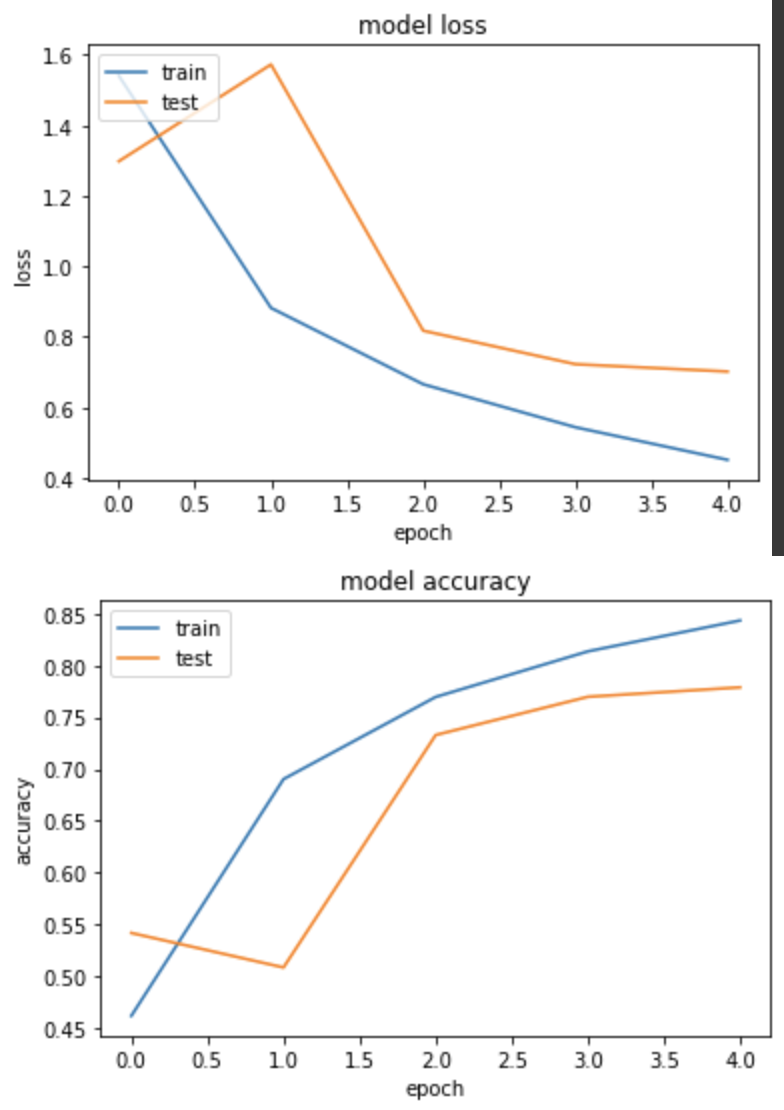

實驗一,RandomNormal

這個初始方式指定平均和標準差之後,便會在該數值間產生對應的常態分佈。

WEIGHT_INIT='random_normal'

input_node, net = get_mobilenetV2_relu((224,224,3), WEIGHT_INIT)

net = tf.keras.layers.GlobalAveragePooling2D()(net)

net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)

model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(

optimizer=tf.keras.optimizers.SGD(LR),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],

)

rdn_history = model.fit(

ds_train,

epochs=EPOCHS,

validation_data=ds_test,

verbose=True)

產出:

loss: 0.4510 - sparse_categorical_accuracy: 0.8434 - val_loss: 0.7016 - val_sparse_categorical_accuracy: 0.7788

實驗二,GlorotNormal (Xavier)

第一次看到這個名稱有點陌生,但查了之後才知道其實就是 Xavier 初始化,這篇文章舉了一個很棒的例子,正向傳播時,假設我們的輸入都是1,如果我們原本用 RandomNormal 產生了 mean=0, std=1 的亂數,那經過這層 layer 得到的輸出為 z,這個 z 的 mean仍然會是0,但是std會大於1,為了壓低變異數,我們會把原先的初始化範圍再縮小 1/sqrt(n) 倍。

WEIGHT_INIT='glorot_normal'

input_node, net = get_mobilenetV2_relu((224,224,3), WEIGHT_INIT)

net = tf.keras.layers.GlobalAveragePooling2D()(net)

net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)

model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(

optimizer=tf.keras.optimizers.SGD(LR),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],

)

glorot_history = model.fit(

ds_train,

epochs=EPOCHS,

validation_data=ds_test,

verbose=True)

產出:

loss: 0.4442 - sparse_categorical_accuracy: 0.8459 - val_loss: 0.5632 - val_sparse_categorical_accuracy: 0.8206

使用 Xavier 拿到比實驗一略好的結果。

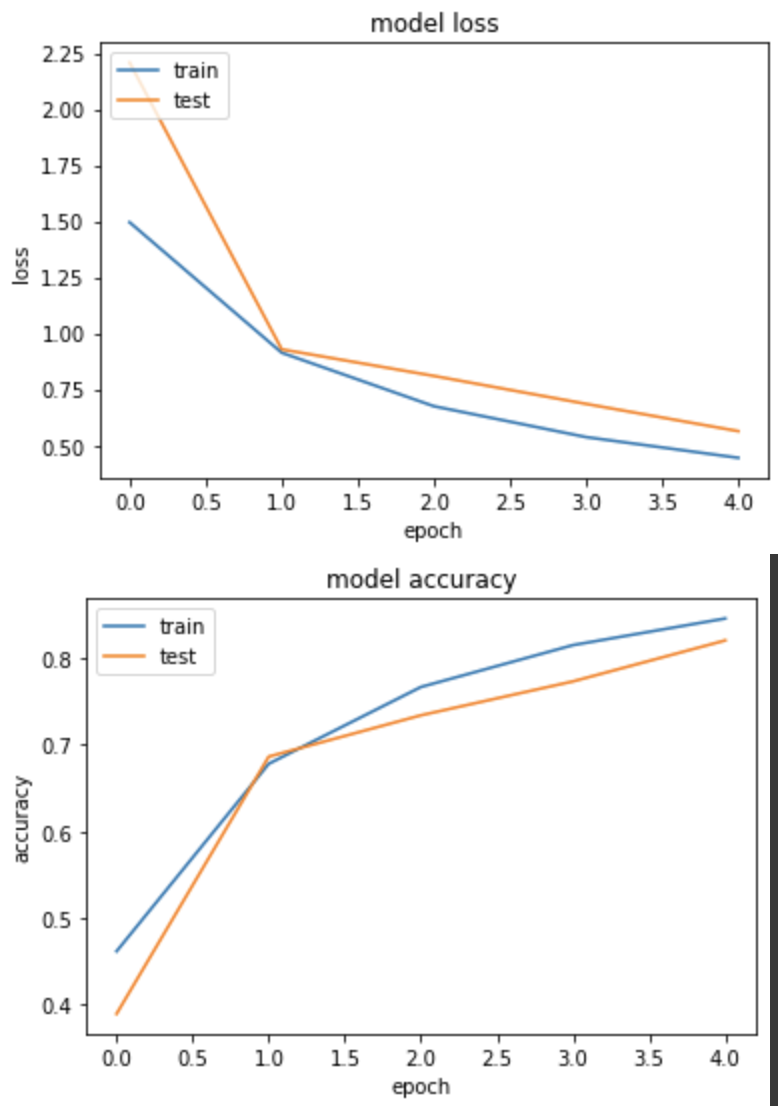

實驗三,HeNormal

由於目前主流的 Act function 幾乎都使用 ReLU,而 ReLU 的微分不是0就是一個正數,變異數是 Xavier 的2倍。

WEIGHT_INIT='he_normal'

input_node, net = get_mobilenetV2_relu((224,224,3), WEIGHT_INIT)

net = tf.keras.layers.GlobalAveragePooling2D()(net)

net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)

model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(

optimizer=tf.keras.optimizers.SGD(LR),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],

)

he_history = model.fit(

ds_train,

epochs=EPOCHS,

validation_data=ds_test,

verbose=True)

產出:

loss: 0.4585 - sparse_categorical_accuracy: 0.8408 - val_loss: 0.8109 - val_sparse_categorical_accuracy: 0.7348

但結果仍輸給 Xavier。

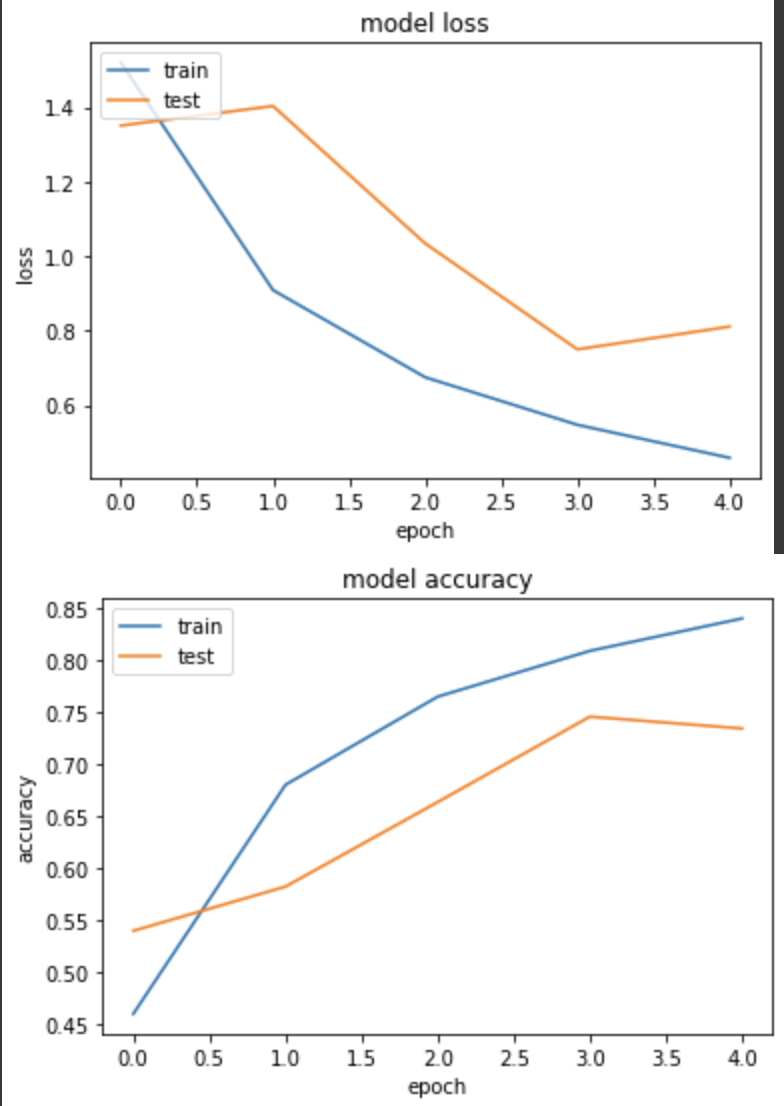

實驗四,LecunNormal

在 ReLU 問世後,接著有其他各種變形的 ReLU 相繼出現,其中 SELU 也是其一,而且發現使用 SELU 時,權重初始化搭配 LecunNormal 使用時,效果特別不錯。

而為了 Demo,我將原先 mobilenetV2 的 Act func 替換成了 SELU 來測試。

def bottleneck(net, filters, out_ch, strides, weight_init, shortcut=True, zero_pad=False):

padding = 'valid' if zero_pad else 'same'

shortcut_net = net

net = tf.keras.layers.Conv2D(filters * 6, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.activations.selu(net)

if zero_pad:

net = tf.keras.layers.ZeroPadding2D(padding=((0, 1), (0, 1)))(net)

net = tf.keras.layers.DepthwiseConv2D(3, strides=strides, use_bias=False, padding=padding, depthwise_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.activations.selu(net)

net = tf.keras.layers.Conv2D(out_ch, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

if shortcut:

net = tf.keras.layers.Add()([net, shortcut_net])

return net

def get_mobilenetV2_selu(shape, weight_init):

input_node = tf.keras.layers.Input(shape=shape)

net = tf.keras.layers.Conv2D(32, 3, (2, 2), use_bias=False, padding='same', kernel_initializer=weight_init)(input_node)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.activations.selu(net)

net = tf.keras.layers.DepthwiseConv2D(3, use_bias=False, padding='same', depthwise_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.activations.selu(net)

net = tf.keras.layers.Conv2D(16, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = bottleneck(net, 16, 24, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_1

net = bottleneck(net, 24, 24, (1, 1), weight_init, shortcut=True) # block_2

net = bottleneck(net, 24, 32, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_3

net = bottleneck(net, 32, 32, (1, 1), weight_init, shortcut=True) # block_4

net = bottleneck(net, 32, 32, (1, 1), weight_init, shortcut=True) # block_5

net = bottleneck(net, 32, 64, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_6

net = bottleneck(net, 64, 64, (1, 1), weight_init, shortcut=True) # block_7

net = bottleneck(net, 64, 64, (1, 1), weight_init, shortcut=True) # block_8

net = bottleneck(net, 64, 64, (1, 1), weight_init, shortcut=True) # block_9

net = bottleneck(net, 64, 96, (1, 1), weight_init, shortcut=False) # block_10

net = bottleneck(net, 96, 96, (1, 1), weight_init, shortcut=True) # block_11

net = bottleneck(net, 96, 96, (1, 1), weight_init, shortcut=True) # block_12

net = bottleneck(net, 96, 160, (2, 2), weight_init, shortcut=False, zero_pad=True) # block_13

net = bottleneck(net, 160, 160, (1, 1), weight_init, shortcut=True) # block_14

net = bottleneck(net, 160, 160, (1, 1), weight_init, shortcut=True) # block_15

net = bottleneck(net, 160, 320, (1, 1), weight_init, shortcut=False) # block_16

net = tf.keras.layers.Conv2D(1280, 1, use_bias=False, padding='same', kernel_initializer=weight_init)(net)

net = tf.keras.layers.BatchNormalization()(net)

net = tf.keras.activations.selu(net)

return input_node, net

訓練

WEIGHT_INIT='lecun_normal'

input_node, net = get_mobilenetV2_selu((224,224,3), WEIGHT_INIT)

net = tf.keras.layers.GlobalAveragePooling2D()(net)

net = tf.keras.layers.Dense(NUM_OF_CLASS)(net)

model = tf.keras.Model(inputs=[input_node], outputs=[net])

model.compile(

optimizer=tf.keras.optimizers.SGD(LR),

loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics=[tf.keras.metrics.SparseCategoricalAccuracy()],

)

lecun_history = model.fit(

ds_train,

epochs=EPOCHS,

validation_data=ds_test,

verbose=True)

產出:

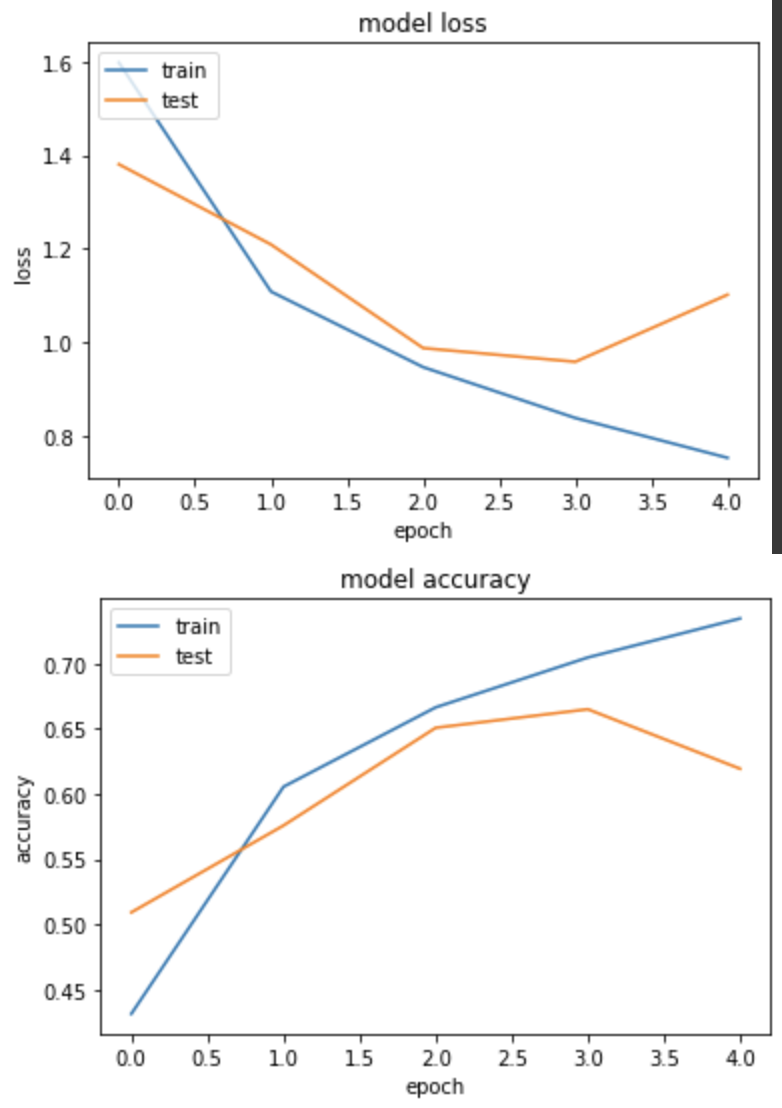

loss: 0.7519 - sparse_categorical_accuracy: 0.7345 - val_loss: 1.1013 - val_sparse_categorical_accuracy: 0.6193

但結果沒有訓練出比較好的模型...

結論:

儘管上述每個實驗的準確度差異有些大,蠻有可能是因為我 epoch 只設5個關係,但可以看到模型都有確實在收斂學習中。

最後整理一下理想的 Act func 對應的權重初始化方式: